Intro

The internet is chock-full of information, and there is often a need to aggregate it in order to gain insights through additional analysis. To that end, writing the code to scrape information from the internet can become somewhat repetitive.

Here we’ll explore a simple approach to automating part of the process of writing the code to retrieve information from the web.

Overview

With the bulk of the internet transitioning to more decoupled models, we are seeing more and more APIs. This lightens our workload, since we won’t have to parse HTML to retrieve the information we need.

At the same time, it gets a little repetitive writing out our structs and requests. After all, data structures and requests are ubiquitous; surely there is a quicker way to do this too?

Fortunately it seems some wise person had a similar thought and went to the effort of making a simple site to automate the creation of the struct as well as the code for the request in Golang using "net/http".

The economy is topical right now, so to demonstrate this process we’ll scrape NASDAQ and send the ticker quote to Slack.

Methodology

Before we get started, let’s lay out a plan of attack for composing our program.

We’ll identify the JSON with our information, generate a struct to model it, generate the request code from cURL, then add logic to unmarshal the JSON and shoot it back to Slack on command.

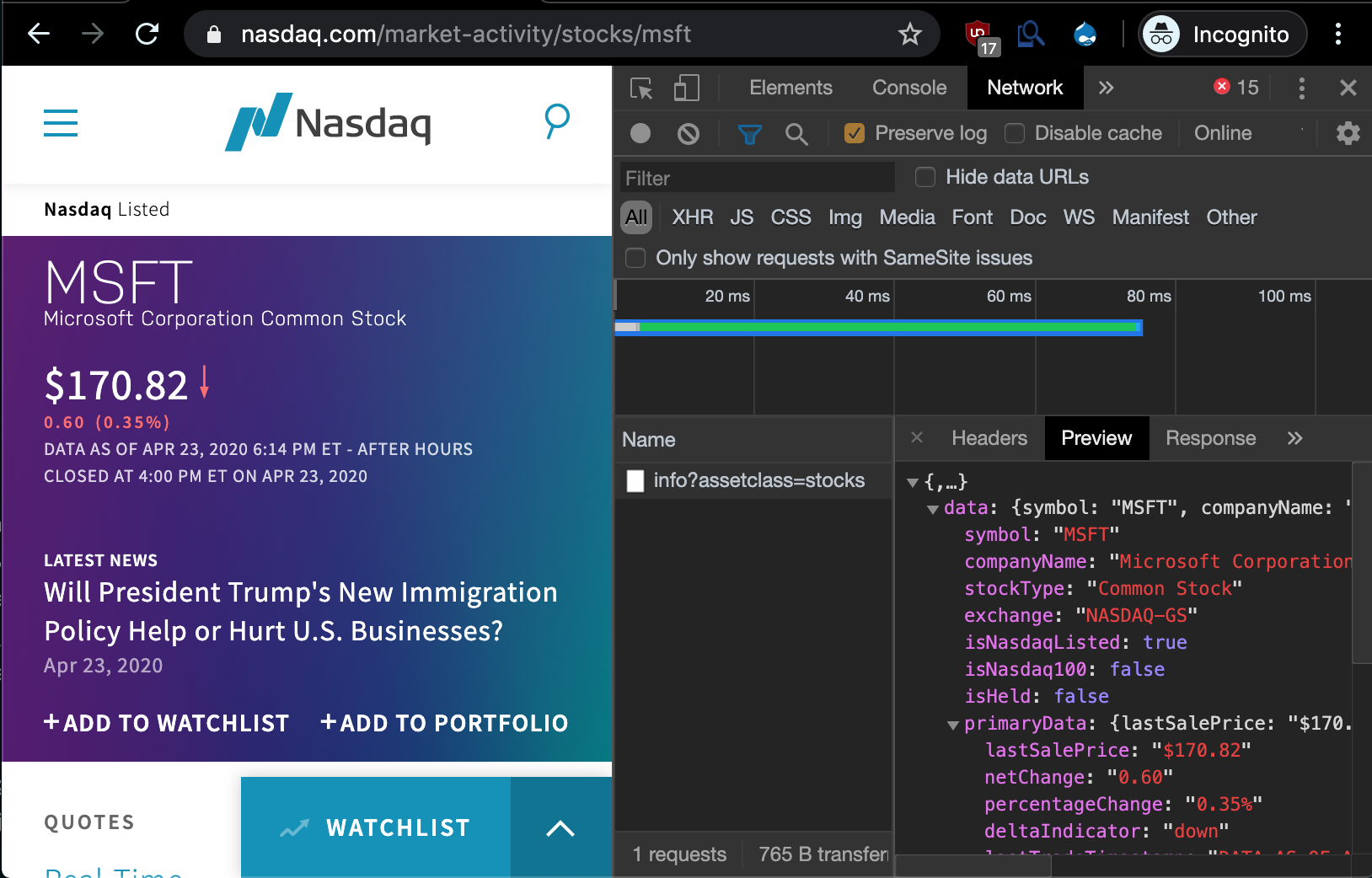

First we’ll want to head on over to the NASDAQ site, browse to a stock, and pop open the Network tab of the developer tools.

Immediately we see what looks like the page making a request to a subdomain, api.nasdaq.com.

While some sites still render templates or PHP on the server, a lot of modern web apps fetch JSON from an API and fill the data into the page.

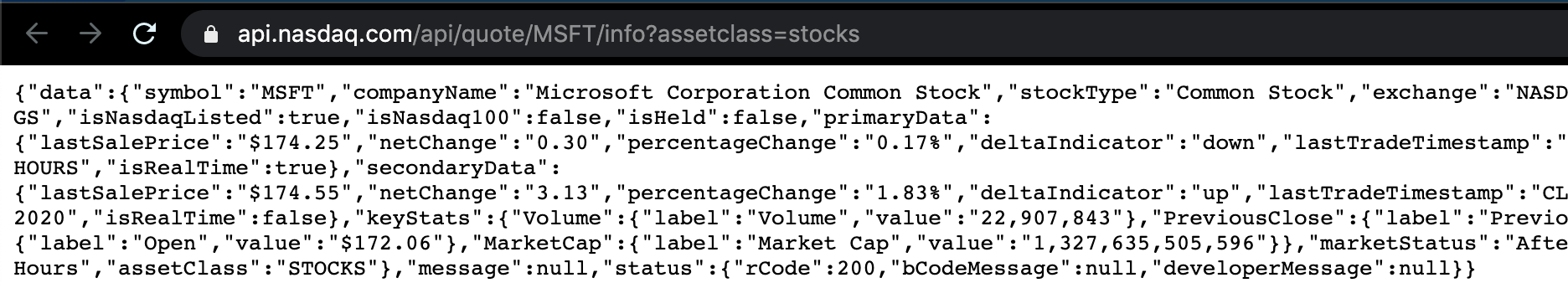

If you visit the API URL directly, you’re greeted with just the bare JSON object.

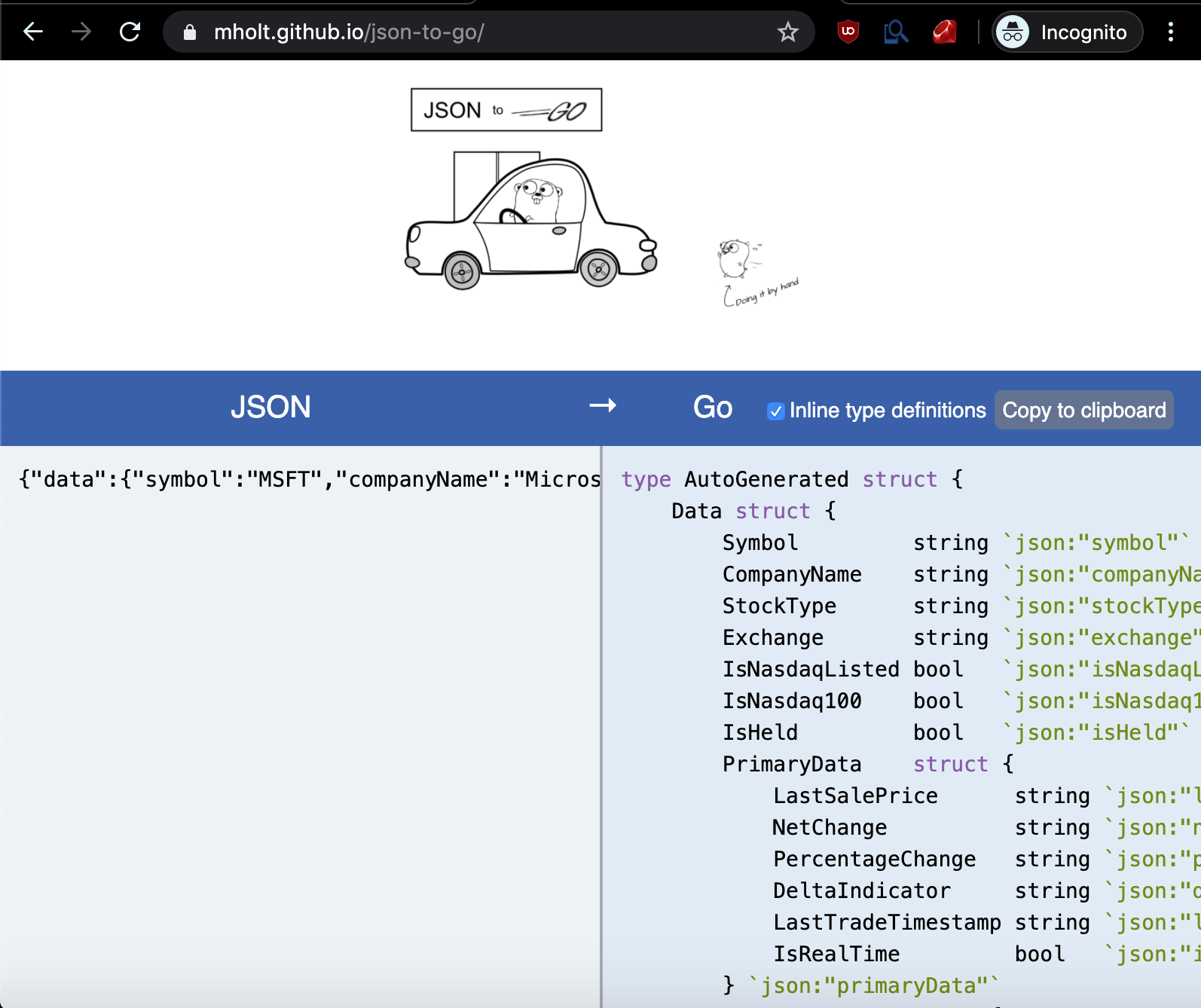

We have our JSON, so now what? Drop it into the JSON to Go converter.

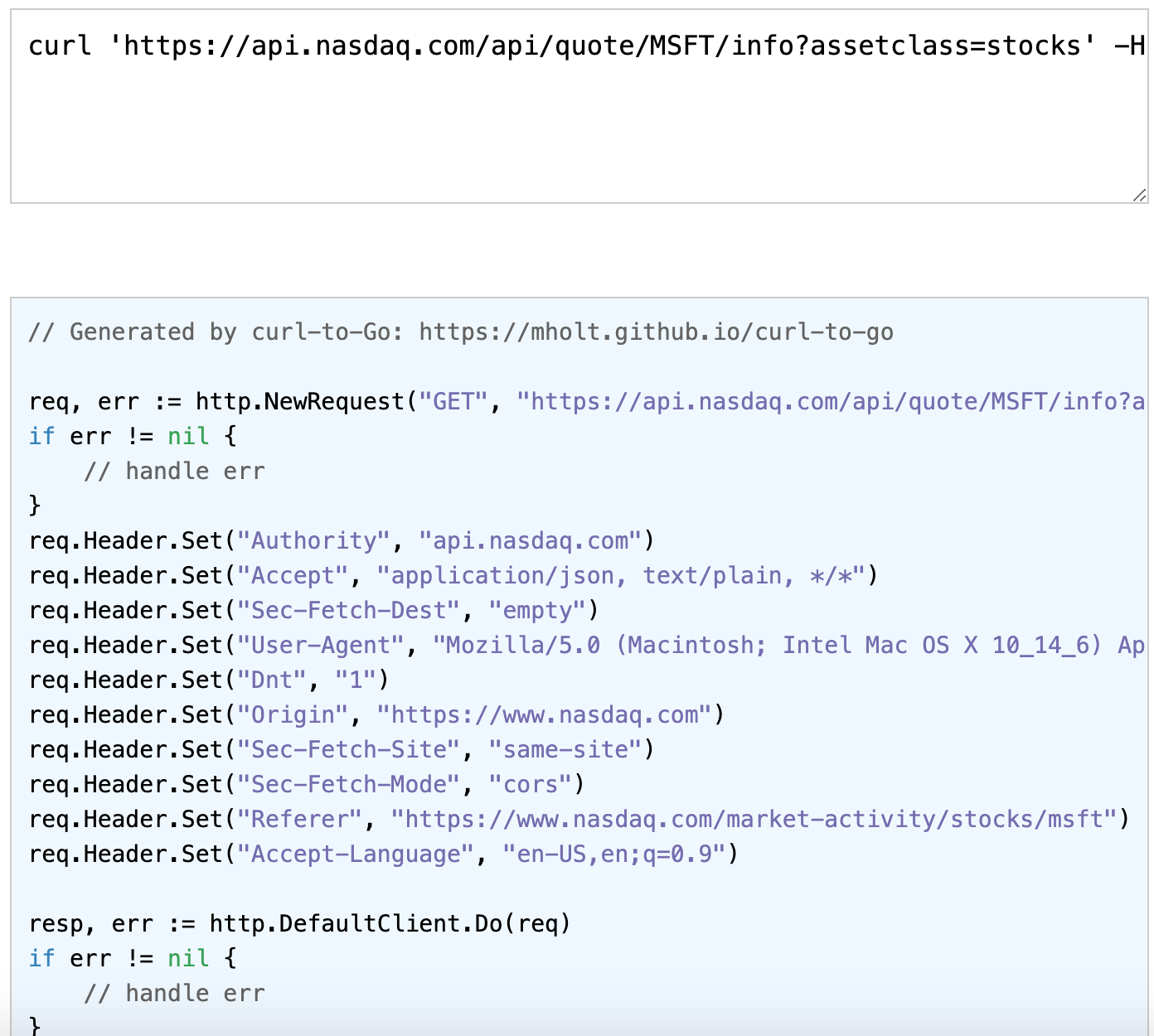
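To give a feel for what comes out of the converter, here is a minimal sketch. The real NASDAQ payload isn't reproduced in this post, so `sampleJSON` and every field name in `Quote` are invented for illustration; the struct you generate will mirror whatever JSON you actually paste in. `parseQuote` is a hypothetical helper added just to show the struct being used.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// sampleJSON is a made-up payload for illustration only.
// It is NOT the actual NASDAQ response.
const sampleJSON = `{
  "data": {
    "symbol": "MSFT",
    "companyName": "Example Corp",
    "primaryData": {
      "lastSalePrice": "$100.00",
      "netChange": "+1.00"
    }
  }
}`

// Quote mirrors sampleJSON using nested anonymous structs with json tags,
// one Go field per JSON key.
type Quote struct {
	Data struct {
		Symbol      string `json:"symbol"`
		CompanyName string `json:"companyName"`
		PrimaryData struct {
			LastSalePrice string `json:"lastSalePrice"`
			NetChange     string `json:"netChange"`
		} `json:"primaryData"`
	} `json:"data"`
}

// parseQuote (hypothetical helper) decodes a raw JSON payload into a Quote.
func parseQuote(raw []byte) (Quote, error) {
	var q Quote
	err := json.Unmarshal(raw, &q)
	return q, err
}

func main() {
	q, err := parseQuote([]byte(sampleJSON))
	if err != nil {
		fmt.Println("decode failed:", err)
		return
	}
	fmt.Println(q.Data.Symbol, q.Data.PrimaryData.LastSalePrice)
}
```

Prices are kept as strings here because the invented payload carries them as formatted text; if the real JSON uses numbers, the generated struct will use numeric types instead.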

Next up we’ll basically repeat the above process, but with the HTTP request that yielded that JSON. Just right-click on the request in the Network tab of developer tools and select `Copy as cURL`.

Drop it into cURL to Go.

Be sure to strip authorization tokens and other such sensitive things.
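The request code that comes out the other end looks roughly like the sketch below once the sensitive headers are trimmed away. Treat the details as assumptions: `newQuoteRequest` is a hypothetical name, the `/quote/` path is a placeholder (copy the real path from the Network tab), and the two headers are just examples of the harmless kind worth keeping.

```go
package main

import (
	"fmt"
	"net/http"
	"net/url"
)

// newQuoteRequest (hypothetical name) builds the GET request for one symbol.
// The "/quote/" path is a placeholder, not the real NASDAQ endpoint.
func newQuoteRequest(baseURL, symbol string) (*http.Request, error) {
	req, err := http.NewRequest(http.MethodGet, baseURL+"/quote/"+url.PathEscape(symbol), nil)
	if err != nil {
		return nil, err
	}
	// Keep only harmless headers. Cookies and Authorization values copied
	// from the browser are deliberately left out.
	req.Header.Set("Accept", "application/json")
	req.Header.Set("User-Agent", "tickerbot-example")
	return req, nil
}

func main() {
	req, err := newQuoteRequest("https://api.nasdaq.com", "MSFT")
	if err != nil {
		fmt.Println("building request failed:", err)
		return
	}
	// Only inspect the request here; nothing is sent over the network.
	fmt.Println(req.Method, req.URL.String())
	fmt.Println("Accept:", req.Header.Get("Accept"))
}
```

Sending it is then a matter of passing `req` to `http.DefaultClient.Do` and closing the response body when done.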

There we go, most of the tedious work is done. All that’s left for this part is to add logic to unmarshal the response and return a formatted string.

The cURL to Go generator gives us a nice `if resp.StatusCode == http.StatusOK {}` block to drop our logic into.
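As a rough sketch of that step, assuming a simplified `Quote` struct with invented field names and a placeholder `/quote/` path, a `GetTicker` function could look like this. The signature is my own guess, `http.Get` stands in for the generated request code to keep things short, and `newFakeAPI` is a hypothetical local stand-in server so the example runs without touching the real API.

```go
package main

import (
	"encoding/json"
	"fmt"
	"net/http"
	"net/http/httptest"
	"net/url"
)

// Quote uses invented field names; generate the real struct from the real JSON.
type Quote struct {
	Data struct {
		Symbol        string `json:"symbol"`
		LastSalePrice string `json:"lastSalePrice"`
		NetChange     string `json:"netChange"`
	} `json:"data"`
}

// GetTicker fetches one quote and returns it as a single formatted line.
func GetTicker(baseURL, symbol string) (string, error) {
	// http.Get keeps the sketch short; the generated request code goes here.
	resp, err := http.Get(baseURL + "/quote/" + url.PathEscape(symbol))
	if err != nil {
		return "", err
	}
	defer resp.Body.Close()

	if resp.StatusCode == http.StatusOK {
		var q Quote
		if err := json.NewDecoder(resp.Body).Decode(&q); err != nil {
			return "", fmt.Errorf("decoding quote: %w", err)
		}
		return fmt.Sprintf("%s: %s (%s)", q.Data.Symbol, q.Data.LastSalePrice, q.Data.NetChange), nil
	}
	return "", fmt.Errorf("unexpected status %s", resp.Status)
}

// newFakeAPI (hypothetical) starts a local server that serves one canned,
// made-up quote, so the sketch can run offline.
func newFakeAPI() *httptest.Server {
	return httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		if r.URL.Path != "/quote/MSFT" {
			http.NotFound(w, r)
			return
		}
		w.Header().Set("Content-Type", "application/json")
		fmt.Fprint(w, `{"data":{"symbol":"MSFT","lastSalePrice":"$100.00","netChange":"+1.00"}}`)
	}))
}

func main() {
	api := newFakeAPI()
	defer api.Close()

	line, err := GetTicker(api.URL, "MSFT")
	if err != nil {
		fmt.Println("lookup failed:", err)
		return
	}
	fmt.Println(line)
}
```

Returning an error for any non-200 status means the caller can decide what to tell the user rather than receiving an empty string.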


Then we add the Slack logic: an HTTP handler that receives the ticker from Slack, calls our GetTicker function, and returns the information to the user.
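Here is one way that handler might be sketched. Slack delivers a slash command as a form-encoded POST with the user's input in the `text` field, and accepts a JSON reply with `response_type` and `text`. Everything else is an assumption for illustration: `tickerHandler`, `fakeLookup` (standing in for GetTicker), and `simulateSlash` are hypothetical names, and verification of Slack's request signature is left out.

```go
package main

import (
	"encoding/json"
	"fmt"
	"net/http"
	"net/http/httptest"
	"net/url"
	"strings"
)

// slackReply is the JSON body sent back for a slash command.
type slackReply struct {
	ResponseType string `json:"response_type"`
	Text         string `json:"text"`
}

// tickerHandler reads the symbol from the "text" form field, runs lookup,
// and writes the result back as a Slack-style JSON reply.
func tickerHandler(lookup func(symbol string) (string, error)) http.HandlerFunc {
	return func(w http.ResponseWriter, r *http.Request) {
		symbol := strings.ToUpper(strings.TrimSpace(r.FormValue("text")))
		// Errors and usage hints stay private to the user who typed the command.
		reply := slackReply{ResponseType: "ephemeral"}
		if symbol == "" {
			reply.Text = "usage: /tickerbot SYMBOL"
		} else if quote, err := lookup(symbol); err != nil {
			reply.Text = "could not fetch " + symbol + ": " + err.Error()
		} else {
			reply.ResponseType = "in_channel"
			reply.Text = quote
		}
		w.Header().Set("Content-Type", "application/json")
		json.NewEncoder(w).Encode(reply)
	}
}

// fakeLookup stands in for GetTicker and returns made-up data.
func fakeLookup(symbol string) (string, error) {
	return symbol + ": $100.00 (+1.00)", nil
}

// simulateSlash posts a form the way Slack would and returns the reply body.
func simulateSlash(h http.Handler, text string) string {
	form := url.Values{"command": {"/tickerbot"}, "text": {text}}
	req := httptest.NewRequest(http.MethodPost, "/tickerbot", strings.NewReader(form.Encode()))
	req.Header.Set("Content-Type", "application/x-www-form-urlencoded")
	rec := httptest.NewRecorder()
	h.ServeHTTP(rec, req)
	return strings.TrimSpace(rec.Body.String())
}

func main() {
	h := tickerHandler(fakeLookup)
	fmt.Println(simulateSlash(h, "msft"))
}
```

In a real deployment the handler would be registered with `http.Handle` and served with `http.ListenAndServe`, with the real lookup function passed in place of `fakeLookup`.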


Conclusion

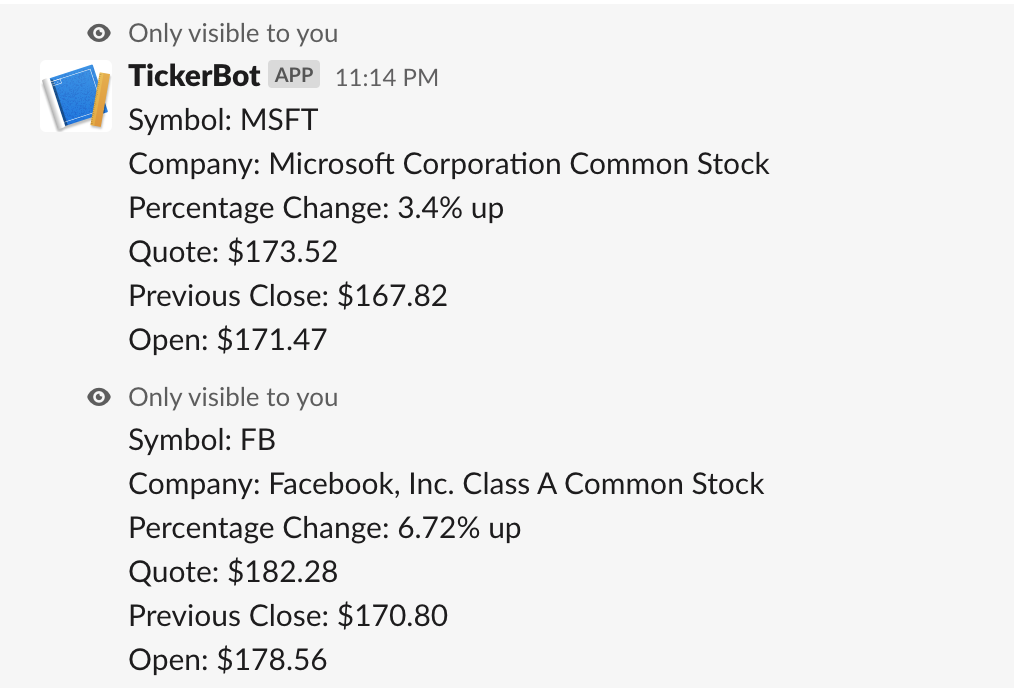

Put it all together and with minimal effort we have ourselves a Slack command that automatically retrieves stock data for us.

The results of `/tickerbot MSFT` and `/tickerbot FB` look something like this:

There are generators for other languages, such as Python, but I personally find it irritating to pip install requests, whereas with Go the HTTP client is already included in the standard library. Not to mention the portability of the statically compiled binary at the end.

While not the most complex or groundbreaking exercise, it’s still a fun one.

Methodically breaking a problem down into steps and coding up the solution is always great practice.