Intro

In 2019 I had my first opportunity to drive a Tesla Model 3. The drive convinced me it was years ahead of the rest of the automotive industry, and others’ technical analyses seem to agree. Despite this, I didn’t think to buy any stock, and shortly after my drive the share price shot up from $330 to over $900. That missed opportunity fostered a deeper interest in finance, which eventually led to this post.

While reading through some forums, I saw a user lamenting that they wished they had a gauge of market sentiment.

So how hard is it for us to scrape social media and have AI/ML tell us how everyone’s feeling?

Overview

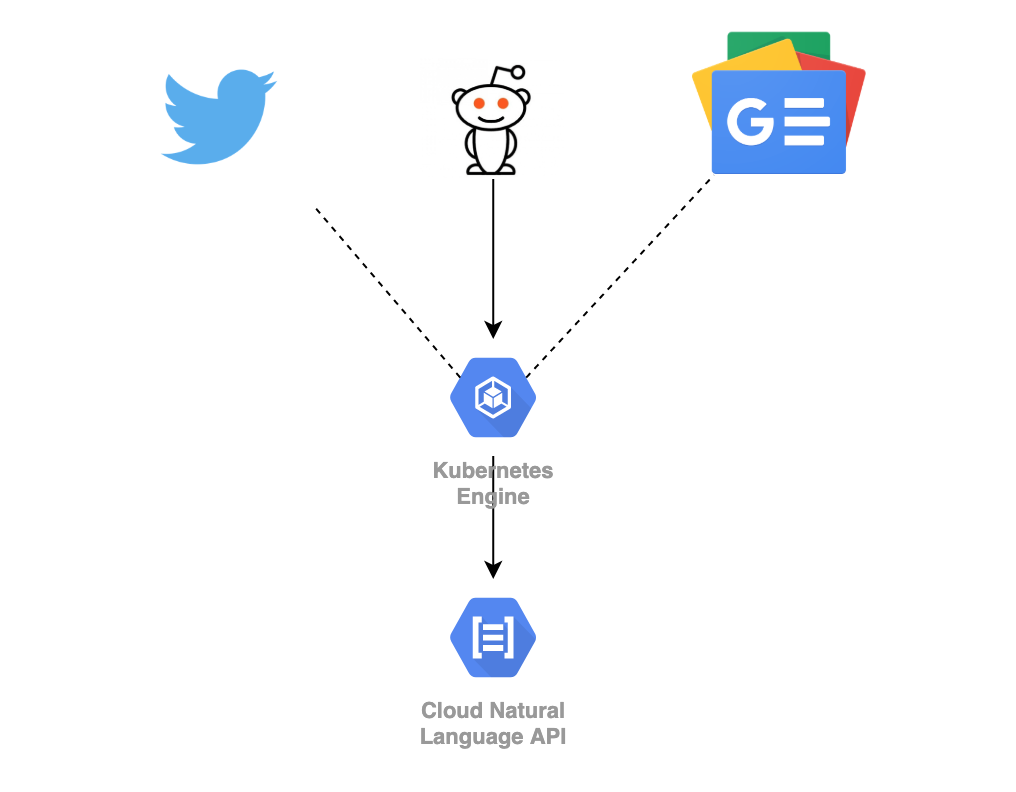

Let’s think about what is needed to accomplish this goal: mainly an AI/ML service that performs sentiment analysis, and something that scrapes social media posts for it to process.

For something this simple there’s no need to build our own AI/ML, so let’s have Google’s Natural Language Processing API do the heavy lifting for us. If our needs were more targeted, we would want to create our own model and train it on data specific to our use case.

Next, let’s use the Golang Reddit API Wrapper (GRAW) to harvest posts from Reddit for analysis.

But what if we wanted to scrape other sources, like Twitter or news sites? We would need to decouple the code that does sentiment analysis from the harvesting code.
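
One way to express that decoupling in Go is a pair of small interfaces, so that each new source only needs its own harvester. The sketch below is purely illustrative. Post, Harvester, Analyzer and Run are hypothetical names, and the toy scorer stands in for a real NLP call.

```go
package main

import (
	"fmt"
	"strings"
)

// Post is a hypothetical, source-agnostic unit of text to analyze.
type Post struct {
	Source string // e.g. "reddit", "twitter"
	Text   string
}

// Harvester would be implemented by each scraper (Reddit, Twitter, news); hypothetical.
type Harvester interface {
	Harvest() ([]Post, error)
}

// Analyzer would be implemented by whatever scores sentiment; hypothetical.
type Analyzer interface {
	Score(text string) (float64, error)
}

// staticHarvester returns canned posts in place of a real scraper.
type staticHarvester struct{ posts []Post }

func (h staticHarvester) Harvest() ([]Post, error) { return h.posts, nil }

// toyAnalyzer scores +1 per "up" and -1 per "down", clamped to [-1, 1].
// It only stands in for a real sentiment service.
type toyAnalyzer struct{}

func (toyAnalyzer) Score(text string) (float64, error) {
	s := 0.0
	for _, w := range strings.Fields(strings.ToLower(text)) {
		switch w {
		case "up":
			s++
		case "down":
			s--
		}
	}
	if s > 1 {
		s = 1
	} else if s < -1 {
		s = -1
	}
	return s, nil
}

// Run feeds every harvester's posts to one analyzer and groups scores by source.
// Neither side knows about the other, so adding a source means adding a Harvester.
func Run(hs []Harvester, a Analyzer) (map[string][]float64, error) {
	out := make(map[string][]float64)
	for _, h := range hs {
		posts, err := h.Harvest()
		if err != nil {
			return nil, err
		}
		for _, p := range posts {
			score, err := a.Score(p.Text)
			if err != nil {
				return nil, err
			}
			out[p.Source] = append(out[p.Source], score)
		}
	}
	return out, nil
}

func main() {
	hs := []Harvester{
		staticHarvester{posts: []Post{{"reddit", "TSLA up up"}, {"reddit", "going down"}}},
		staticHarvester{posts: []Post{{"twitter", "meh"}}},
	}
	scores, err := Run(hs, toyAnalyzer{})
	if err != nil {
		panic(err)
	}
	fmt.Println(scores)
}
```

In the architecture described here, the Analyzer side would live behind a GRPC service rather than in the same process. The interface boundary stays the same either way.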

So we’ll start off decoupled by implementing our logic as a client-server GRPC model. GKE (Google Kubernetes Engine) is used here because all of this code is written to be containerized and run in Kubernetes once deployed.

Methodology

First, identify what sort of data we’ll be working with. GRPC lines up perfectly with this task because it defines data models using Protocol Buffers (proto3). From those definitions, protoc and its GRPC plugin generate the function stubs for our client and server. That beats hand-writing API routes for simple things like GET or POST.

We’ll send our service a MediaPage data structure and it’ll send us back a MediaAnalysis.
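
As a rough illustration, here is a plain-Go sketch of what the two messages might carry once generated into Go types. Every field name below is hypothetical and is not taken from the post’s .proto. The score and magnitude fields follow the shape of the document sentiment that Google’s NLP API returns.

```go
package main

import (
	"errors"
	"fmt"
	"time"
)

// MediaPage approximates the request message. Field names are hypothetical.
type MediaPage struct {
	Source    string    // e.g. "reddit"
	Author    string    // poster's username
	Title     string    // post title
	Body      string    // post self-text
	Keyword   string    // keyword that caused the post to be harvested
	CreatedAt time.Time // when the post was made
}

// MediaAnalysis approximates the response: the page plus document-level
// sentiment (score in [-1, 1], magnitude >= 0).
type MediaAnalysis struct {
	Page      MediaPage
	Score     float32
	Magnitude float32
}

// Text joins title and body into the string that would be sent for analysis.
func (p MediaPage) Text() string {
	switch {
	case p.Body == "":
		return p.Title
	case p.Title == "":
		return p.Body
	default:
		return p.Title + "\n\n" + p.Body
	}
}

// Validate rejects pages with nothing to analyze.
func (p MediaPage) Validate() error {
	if p.Title == "" && p.Body == "" {
		return errors.New("media page has no text")
	}
	return nil
}

func main() {
	p := MediaPage{Source: "reddit", Title: "TSLA deliveries", Body: "Beat estimates again.", Keyword: "TSLA", CreatedAt: time.Now()}
	if err := p.Validate(); err != nil {
		panic(err)
	}
	a := MediaAnalysis{Page: p, Score: 0.7, Magnitude: 1.4} // placeholder numbers, not real API output
	fmt.Printf("%s: %q -> score %.1f\n", a.Page.Keyword, a.Page.Text(), a.Score)
}
```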

From there, the returned payload could be sent to a database for retention and future display, or sent off to an ETL pipeline for further analysis.
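
As one example of that further analysis, a downstream step could roll individual results up into a single per-keyword gauge, which is roughly what the forum user was asking for. This sketch uses a magnitude-weighted mean of scores, so strongly worded posts count more than lukewarm ones. That weighting is our own assumption and not something the NLP API prescribes. Result is a hypothetical flattened MediaAnalysis row, and Gauge is a hypothetical helper.

```go
package main

import (
	"fmt"
	"sort"
)

// Result is a hypothetical flattened analysis row.
type Result struct {
	Keyword   string
	Score     float64 // -1..1
	Magnitude float64 // >= 0
}

// Gauge returns, per keyword, the magnitude-weighted mean sentiment score.
func Gauge(results []Result) map[string]float64 {
	sum := map[string]float64{}
	weight := map[string]float64{}
	for _, r := range results {
		sum[r.Keyword] += r.Score * r.Magnitude
		weight[r.Keyword] += r.Magnitude
	}
	out := make(map[string]float64, len(sum))
	for k, s := range sum {
		if weight[k] == 0 {
			out[k] = 0 // every post was emotionless; avoid dividing by zero
			continue
		}
		out[k] = s / weight[k]
	}
	return out
}

func main() {
	g := Gauge([]Result{
		{"TSLA", 0.8, 3.0},
		{"TSLA", -0.5, 1.0},
		{"GME", 0.0, 0.0},
	})
	keys := make([]string, 0, len(g))
	for k := range g {
		keys = append(keys, k)
	}
	sort.Strings(keys) // map iteration order is random; sort for stable output
	for _, k := range keys {
		fmt.Printf("%s %.2f\n", k, g[k])
	}
}
```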

Here’s what the .proto file looks like.


With this setup, running protoc --go_out=plugins=grpc:. *.proto produces a .pb.go file containing our function stubs.

With the core code generated, the next step is to set up a quick server.go and client.go.

For the server, we just need to implement the Generate function defined in the .proto and have it run sentiment analysis before returning a MediaAnalysis.


This is pretty easy because Google’s NLP API also exposes Protobuf request and response types, so we can make the API call and pass the data much as it’s passed between our client and server services.
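
Google’s NLP API reports document sentiment as two numbers. The score runs from -1.0 to 1.0. The magnitude runs from 0.0 upward and reflects overall emotional strength regardless of direction. One way to turn those two numbers into a label is sketched below. The 0.25 and 1.0 cut-offs are hypothetical and would need tuning against real posts. The "mixed" bucket covers posts whose positive and negative statements cancel to a near-zero score while still carrying a large magnitude.

```go
package main

import "fmt"

// Label buckets a document-level sentiment result. The thresholds are
// assumptions, not values recommended by the API.
func Label(score, magnitude float32) string {
	const scoreCut = 0.25 // hypothetical: how far from 0 counts as a clear lean
	const mixedMag = 1.0  // hypothetical: how much emotion makes a neutral score "mixed"
	switch {
	case score >= scoreCut:
		return "positive"
	case score <= -scoreCut:
		return "negative"
	case magnitude >= mixedMag:
		return "mixed"
	default:
		return "neutral"
	}
}

func main() {
	for _, r := range []struct{ s, m float32 }{{0.8, 3.0}, {-0.6, 1.1}, {0.0, 4.0}, {0.1, 0.2}} {
		fmt.Printf("score=%.1f magnitude=%.1f -> %s\n", r.s, r.m, Label(r.s, r.m))
	}
}
```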

Now that we know what the server logic looks like, let’s look at the client.

Harvesting Reddit posts is pretty easy.

All that’s needed is to set up the logic to harvest posts using GRAW and then pack the data into the MediaPage Protobuf before sending it off to our server.


All that’s going on here is calling GRAW to harvest some Reddit posts and, for each post that contains a keyword we care about, packing it into the MediaPage Protobuf.
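
A plain substring check would also match TSLA inside TSLAQ. A slightly stricter sketch tokenizes the title and self-text and compares whole tokens case-insensitively. RedditPost is a hypothetical stand-in for the fields pulled from a GRAW post, not GRAW’s own type, and MatchKeyword is a hypothetical helper.

```go
package main

import (
	"fmt"
	"strings"
	"unicode"
)

// RedditPost is a hypothetical stand-in for the harvested post fields we use.
type RedditPost struct {
	Title    string
	SelfText string
}

// MatchKeyword returns the first keyword (in list order) that appears as a whole
// token in the post, ignoring case and punctuation, so "$TSLA" and "tsla," both
// match "TSLA" but "TSLAQ" does not. It returns "" when nothing matches.
func MatchKeyword(p RedditPost, keywords []string) string {
	notWordChar := func(r rune) bool { return !unicode.IsLetter(r) && !unicode.IsDigit(r) }
	tokens := map[string]bool{}
	for _, t := range strings.FieldsFunc(p.Title+" "+p.SelfText, notWordChar) {
		tokens[strings.ToLower(t)] = true
	}
	for _, k := range keywords {
		if tokens[strings.ToLower(k)] {
			return k
		}
	}
	return ""
}

func main() {
	keywords := []string{"TSLA", "Tesla"}
	posts := []RedditPost{
		{Title: "$TSLA earnings thread", SelfText: "thoughts?"},
		{Title: "Index funds only", SelfText: "boring but fine"},
		{Title: "TSLAQ is back"},
	}
	for _, p := range posts {
		if k := MatchKeyword(p, keywords); k != "" {
			fmt.Printf("keep %q (matched %s)\n", p.Title, k)
		}
	}
}
```

Only posts that match would then be packed into a MediaPage, with the matched keyword kept alongside the text for later per-keyword aggregation.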

Next, send it to the server by setting up the GRPC client with our generated stubs and calling the Generate function defined in the .proto and implemented on the server earlier.

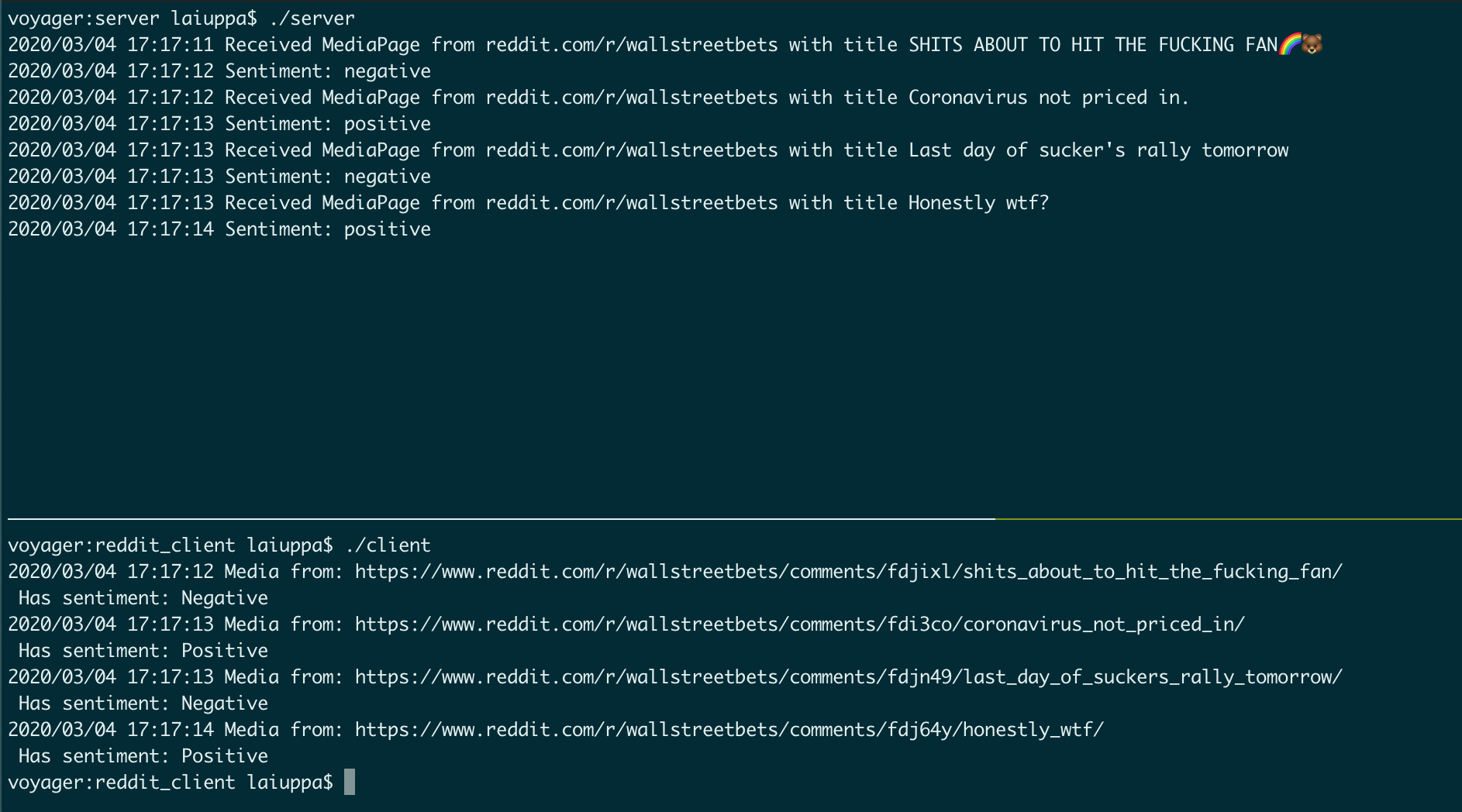

The final result winds up looking something like this.

Conclusion

Not only did Google’s NLP API make it dead simple to get sentiment analysis, but GRPC made it easy to split things into a remote service model, keeping our Reddit harvester and processing logic separate. There are many benefits to this from a deployment perspective, but that’s a topic for a DevOps-oriented post.

With all of the options available on cloud platforms, we can throw together systems like this in no time.

On top of this, there’s a multitude of other sources you can roll into your intelligence gathering, such as Google Trends, historical stock data like volume and OHLC (open, high, low, close) prices, and perhaps some reconnaissance tools to identify unusual options activity or visualize options data.

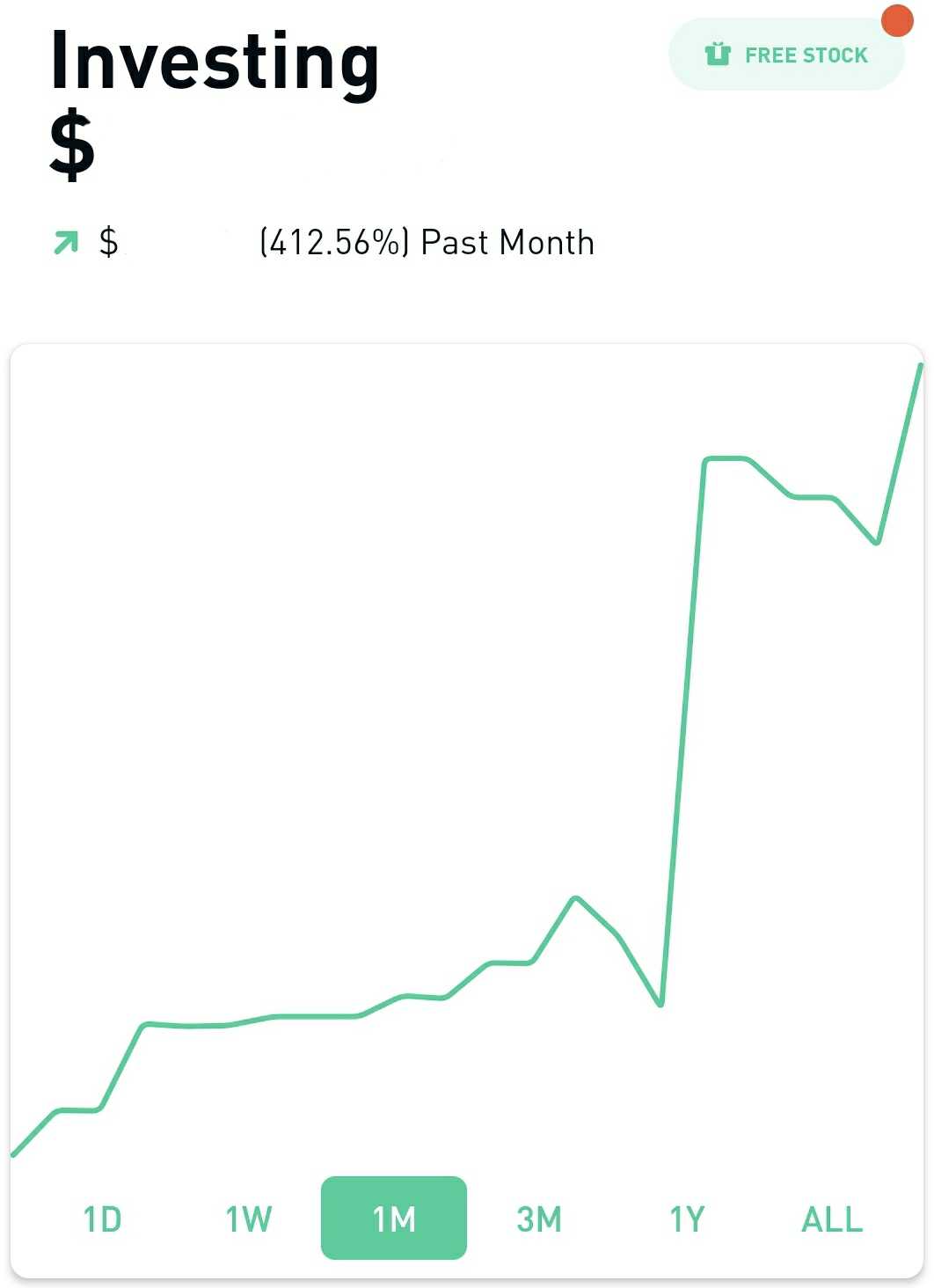

A month of studying and trading so far has yielded a 410% portfolio increase. Not too shabby for missing that great Tesla price surge.

Disclaimer: none of this is meant as financial advice or a recommendation of any kind. What you do with your investments is at your own volition and risk.